| Data Management Series |

Introduction

Around the various data islands and the models that support them, a whole range of database objects (views, stored procedures, user-defined functions) and non-database objects (classes, strongly typed datasets, reports, ad-hoc queries, etc.) is created. Each reference to a database object creates a database dependency, or simply a dependency, between that object and the objects that reference it. Thus any change to a database object could impact its referents, resulting in broken links, invalid calls or other errors that might break the calling applications or isolated pieces of code (e.g. reports, ad-hoc queries, SQL script-based logic).

Tracking Database Dependencies

Many organizations document such dependencies in data dictionaries or similar documentation, one of the reasons being that this makes it easier to identify the objects impacted by changes to the database structure. One of the problems is that the documentation is often application-oriented, targeting the application that uses the data. If multiple applications consume the same data, it’s not so easy to aggregate all the dependencies, especially when they are stored in Excel files, dispersed documents or repositories with complicated access permissions.

That’s one of the reasons why an organization might consider storing in the source database as much as possible of the business logic directly related to data. Encapsulating queries and procedural logic in views, stored procedures, user-defined functions or similar objects seems a good idea for reducing code maintenance, hiding the complexity of a database from its consumers (users, services, web/desktop applications, etc.), and for several other considerations.

The most important of these considerations is that databases store not only the respective objects and statistics about them, but can also store the dependencies between them, which makes impact analysis, or any other analysis based on the dependencies between objects, easier. It’s at the discretion of developers, architects or other professionals with decision power whether to take advantage of such functionality.

Foreign Key Constraints

The simplest and most natural dependency information to store is the primary-foreign key relation implemented in the form of a constraint. Foreign key constraints, as they are called, identify and enforce the relationship between two tables: “identify” because they make the relation explicit, and “enforce” because they check the validity of foreign-primary key value pairs when records are inserted, updated or deleted, thus enforcing the referential integrity of the database.

When a deletion is attempted on a record covered by such a constraint, the database engine checks whether any dependent record (in the same or another table) references it; if one does, the deletion is by default aborted and an error is raised. A check is also performed when a record is inserted or updated in the child table, the respective action being aborted if the foreign key reference is not valid.
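The two checks described above can be tried out with a minimal sketch. It assumes SQLite through Python's standard `sqlite3` module purely for convenience; the `customer` and `sales_order` tables are hypothetical, and other database engines word their errors differently.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite enforces foreign keys only when this pragma is switched on.
conn.execute("PRAGMA foreign_keys = ON")
conn.execute("CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute(
    "CREATE TABLE sales_order ("
    " id INTEGER PRIMARY KEY,"
    " customer_id INTEGER NOT NULL REFERENCES customer(id))"
)
conn.execute("INSERT INTO customer (id, name) VALUES (1, 'Acme')")
conn.execute("INSERT INTO sales_order (id, customer_id) VALUES (10, 1)")


def try_statement(sql):
    """Run one statement; return None on success or the error text on failure."""
    try:
        conn.execute(sql)
        return None
    except sqlite3.IntegrityError as exc:
        return str(exc)


# Deleting a parent row that is still referenced is rejected.
delete_error = try_statement("DELETE FROM customer WHERE id = 1")
# Inserting a child row that points to a missing parent is rejected as well.
insert_error = try_statement(
    "INSERT INTO sales_order (id, customer_id) VALUES (11, 99)"
)
print(delete_error, insert_error, sep="\n")
```

Both statements fail with an integrity error, and the parent row remains in place.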

On the other hand, foreign key constraints could bring additional complexity during migration tasks, though with a little effort and a good architecture the overhead can be minimized. In addition, foreign key constraints could be used by third-party tools to provide some degree of automation when joining tables, or for other purposes.
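As an illustration of the kind of automation a tool could build on foreign key metadata, the sketch below reads the constraints of a child table and proposes join clauses. It assumes SQLite, whose `PRAGMA foreign_key_list` exposes this metadata; the tables and the `join_clauses` helper are hypothetical, and other engines expose the same information through their own catalog views.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE sales_order (
    id INTEGER PRIMARY KEY,
    customer_id INTEGER REFERENCES customer(id)
);
""")


def join_clauses(child_table):
    """Propose one JOIN clause per foreign key defined on child_table."""
    # Each row holds: id, seq, parent table, child column, parent column, ...
    rows = conn.execute(f"PRAGMA foreign_key_list({child_table})").fetchall()
    return [
        f"JOIN {parent} ON {child_table}.{child_col} = {parent}.{parent_col}"
        for _id, _seq, parent, child_col, parent_col, *_rest in rows
    ]


clauses = join_clauses("sales_order")
print(clauses)
```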

Object Dependencies

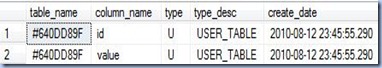

More complex dependencies are those between database objects: views, stored procedures, user-defined functions or tables. In some cases it is enough to see that there is a dependency between two objects, though in more complex situations it would be useful to know which specific attribute of the referenced object is used, especially when the metadata are used for automation tasks. By creating the dependency tree, that is, the tree of objects resulting from the dependencies between the various database objects, it’s possible to provide more accurate impact assessments.
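To make the idea of a dependency tree concrete, here is a minimal sketch of an impact assessment done as a breadth-first walk. The object names and the `referenced_by` map are hypothetical; in practice the map would be filled from the metadata the database stores.

```python
from collections import deque

# Hypothetical map: each object -> the objects that reference it directly.
referenced_by = {
    "dbo.Orders": ["dbo.vOrders", "dbo.fnOrderTotal"],
    "dbo.vOrders": ["dbo.pOrderReport"],
    "dbo.fnOrderTotal": ["dbo.pOrderReport"],
    "dbo.pOrderReport": [],
}


def impacted_objects(changed):
    """Return every direct or indirect dependent of `changed` with its depth."""
    depth = {}
    queue = deque([(changed, 0)])
    while queue:
        name, level = queue.popleft()
        for dependent in referenced_by.get(name, []):
            if dependent not in depth:  # visit each object only once
                depth[dependent] = level + 1
                queue.append((dependent, level + 1))
    return depth


impact = impacted_objects("dbo.Orders")
print(impact)
```

The depth shows how far a dependent sits from the changed object, which helps in prioritizing what to retest first.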

So far only the dependencies between database objects were considered, though, as highlighted above, there are many other objects stored outside a database that reference database objects. It makes sense to have a global repository in which to store information about dependencies, preferably in a relational database that can easily be interrogated using simple flat or hierarchical queries.
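Such a repository could be sketched as a single table interrogated with a hierarchical query. The example below assumes SQLite and a recursive common table expression; the `object_dependency` table, its columns and the object names (including the report and class files) are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE object_dependency (
    referencing TEXT NOT NULL,  -- the object that depends on another one
    referenced  TEXT NOT NULL,  -- the object being depended on
    PRIMARY KEY (referencing, referenced)
);
INSERT INTO object_dependency VALUES
    ('vOrders', 'Orders'),
    ('pOrderReport', 'vOrders'),
    ('OrdersReport.rdl', 'pOrderReport'),
    ('OrderService.cs', 'vOrders');
""")

# Hierarchical query: walk from the changed object to all its dependents.
# It assumes the stored dependencies contain no cycles.
IMPACT_QUERY = """
WITH RECURSIVE impact(name, depth) AS (
    SELECT referencing, 1 FROM object_dependency WHERE referenced = ?
    UNION
    SELECT d.referencing, i.depth + 1
    FROM object_dependency AS d
    JOIN impact AS i ON d.referenced = i.name
)
SELECT name, MIN(depth) AS depth FROM impact GROUP BY name ORDER BY depth, name
"""

impact = conn.execute(IMPACT_QUERY, ("Orders",)).fetchall()
for name, depth in impact:
    print(depth, name)
```

Because database and non-database objects sit in the same table, one query covers both the view and the report or class that ultimately depend on the table.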

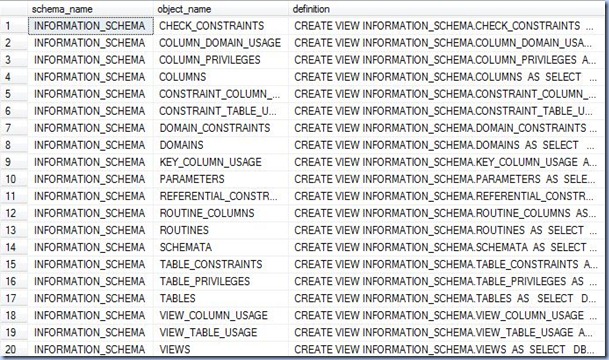

Metadata

The object definitions, statistics, dependencies and other information stored about data, or about the structures and objects related to data, are encompassed under the denomination of metadata, commonly defined as “data about data”. Metadata could be used not only as input for impact analysis but also for automating business logic, functionality that opens new perspectives in development. Einstein’s belief that “problems cannot be solved by the same level of thinking that created them” is reflected in the world of databases by the fact that the metadata stored about database objects help to solve problems related to the objects and the data the databases contain. For example, during a data migration project the two database structures could be mapped at table and attribute level, making it possible to create validation rules in an automated manner.
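The migration example could look like the following sketch, in which an attribute-level mapping drives the generation of validation queries. Everything here is an assumption made for illustration: the mapping format, the table and column names, and the rule itself (list the source values that never arrived in the target).

```python
import sqlite3

# Hypothetical attribute-level mapping:
# (source table, source column, target table, target column)
MAPPINGS = [
    ("legacy_customer", "cust_name", "customer", "name"),
    ("legacy_customer", "cust_city", "customer", "city"),
]


def validation_query(src_table, src_col, tgt_table, tgt_col):
    """Build a query listing source values that are missing from the target."""
    return (
        f"SELECT {src_col} FROM {src_table} "
        f"EXCEPT SELECT {tgt_col} FROM {tgt_table}"
    )


conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE legacy_customer (cust_name TEXT, cust_city TEXT);
CREATE TABLE customer (name TEXT, city TEXT);
INSERT INTO legacy_customer VALUES ('Acme', 'Berlin'), ('Globex', 'Paris');
INSERT INTO customer VALUES ('Acme', 'Berlin'), ('Globex', NULL);
""")

# Run one generated rule per mapped attribute and collect the missing values.
missing = {
    (src_table, src_col): [
        row[0]
        for row in conn.execute(
            validation_query(src_table, src_col, tgt_table, tgt_col)
        )
    ]
    for src_table, src_col, tgt_table, tgt_col in MAPPINGS
}
print(missing)
```

Adding a new mapped attribute then only means adding a row to the mapping, not writing another validation query by hand.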

Even if databases come with a predefined structure for storing metadata, the various solutions developed on top of them require additional metadata to be stored. In theory it would be great if the databases’ metadata structures could be extended for this purpose, though the risks involved in altering such structures lead to the existence of parallel metadata repositories, in which a significant percentage of the database’s metadata is duplicated.

Beyond Database Dependencies

Talking about data mappings, integration projects and integration functionality/features rely heavily on them, and they involve a degree of automation too. Integration of data doesn’t necessarily occur only at application level. In the context of the web’s evolution, the tendency is to link and integrate the various data islands (see linkeddata.org), especially the ones with public character, and thus provide cross-database functionality. Many of the problems such an approach implies are solved at metadata level, new metadata and dependency levels being required for this purpose.

Created: Aug-2010, Last Reviewed: Mar-2024