30 March 2010

The Power of Joins – Part I: Introduction

There are various ways of naming the tables involved in a join:

- left vs. right tables, where the left table is the first listed table participating in the join and the right table the second listed table participating in the join;

- parent vs. dependent tables, which refers to tables between which a direct relationship exists, in other words a primary key and a foreign key, the dependent table being the one containing the foreign key and the parent table the one containing the primary key;

- parent vs. child tables, which is similar to the previous definition, the child table being an alternative name for the dependent table; in addition, we can also talk about grandchildren when a child table is referenced in turn by another table, which is called the grandchild table when the three tables are considered together;

- referencing vs. referenced tables, another alternative for naming the tables between which a direct relationship exists, the referencing table being the one containing the foreign key and the referenced table the one containing the primary key;

- inner vs. outer tables, terms used only in the case of outer joins, the outer table being the table from which all the records are selected, while the inner table is the table from which only the matched records are selected; therefore the two are sometimes also called the row-preserving table and the null-supplying table, respectively.

None of the above naming conventions is perfect, because none can be used to address all the join situations in which tables are involved, and some of the terms may be used interchangeably, especially when a term is preferred in order to convey the adequate meaning; for example, by referring to a table as an inner table we also imply that we are dealing with a left or right join. I realized that over time I have used all of the above terms in one situation or another; however, I prefer the left vs. right tables naming, and occasionally inner vs. outer tables when referring to left or right joins.
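As a rough illustration of the naming conventions above, here is a minimal T-SQL sketch. The Customers and Orders tables and their columns are hypothetical, introduced only for this example: Customers plays the parent role (it holds the primary key) and Orders the child/dependent role (it holds the foreign key).

```sql
-- hypothetical parent table (holds the primary key)
CREATE TABLE Customers (
    CustomerID int NOT NULL PRIMARY KEY
  , CustomerName varchar(50) NOT NULL);

-- hypothetical child/dependent table (holds the foreign key)
CREATE TABLE Orders (
    OrderID int NOT NULL PRIMARY KEY
  , CustomerID int NOT NULL REFERENCES Customers (CustomerID)
  , Amount decimal(10, 2) NOT NULL);

INSERT INTO Customers VALUES (1, 'Alpha');
INSERT INTO Customers VALUES (2, 'Beta');
INSERT INTO Orders VALUES (10, 1, 100.00);

-- Customers is the left table, Orders the right table
SELECT C.CustomerID
, C.CustomerName
, O.OrderID
, O.Amount
FROM Customers C
     LEFT JOIN Orders O
       ON C.CustomerID = O.CustomerID;
-- customer 2 has no orders, so it is returned with NULL in OrderID and Amount
```

In this LEFT JOIN all the Customers rows are preserved (row-preserving table), while Orders supplies NULLs for the customers without orders (null-supplying table).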

In addition to the two tables joined, a query typically also includes a join operator that specifies the join type (union, inner, outer or cross join), the join constraints that specify which attributes are used to join the tables and the logical operators used, and eventually the non-join constraints, which refer to any constraints other than the join constraints. I’m saying typically because either of the first two could be missing: if both the join constraint and the operator are missing then we deal with a Cartesian join, while if the join constraint appears in the WHERE instead of the FROM clause then there is no need to specify the join operator, and in the case of a UNION there is no need to specify the join constraint, though I will come back to this topic later.
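The three situations described above could be sketched as follows. This is only an illustration under my own assumptions: the table variables @A and @B, their columns and the sample values are hypothetical.

```sql
-- hypothetical datasets, used only for illustration
DECLARE @A TABLE (ID int NOT NULL, ColumnX varchar(10));
DECLARE @B TABLE (ID int NOT NULL, ColumnY varchar(10));

INSERT INTO @A VALUES (1, 'a1');
INSERT INTO @A VALUES (2, 'a2');
INSERT INTO @B VALUES (1, 'b1');
INSERT INTO @B VALUES (3, 'b3');

-- join operator and join constraint in the FROM clause,
-- non-join constraint in the WHERE clause
SELECT A.ID, A.ColumnX, B.ColumnY
FROM @A A
     JOIN @B B
       ON A.ID = B.ID
WHERE A.ColumnX LIKE 'a%';

-- the same inner join, with the join constraint moved to the WHERE clause
-- and no join operator
SELECT A.ID, A.ColumnX, B.ColumnY
FROM @A A, @B B
WHERE A.ID = B.ID
  AND A.ColumnX LIKE 'a%';

-- neither join constraint nor join operator: Cartesian join (2 x 2 = 4 rows)
SELECT A.ID IDA, B.ID IDB
FROM @A A, @B B;
```

The first two queries return the same single row (ID 1); the explicit JOIN ... ON form keeps the join and non-join constraints visibly apart, which is why many developers prefer it.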

The work with joins is deeply rooted in Set Theory (the joined tables are also referred to as datasets) and its set operations of union, subtraction and intersection, whose projection in the world of databases comes, from my point of view (I’m not sure whether the database literature really discusses this), in two flavors: vertical vs. horizontal joining. By vertical joining I’m referring to the intrinsic character of joins, the attributes from the two joined datasets appearing on the same row of the result dataset, while in horizontal joining they never appear on the same row, each row being formed exclusively from the records of only one of the tables involved in the join. Thus we can discuss vertical/horizontal joins as projections of the union, intersection and subtraction operations, each of them with different connotations, the horizontal operations being actually similar to the ones from set theory. If we discuss joins then we inevitably have to mention also the anti-joins and semi-joins, special types of queries based on the NOT IN and EXISTS operators, respectively.
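A minimal sketch of the horizontal operations and of the semi-join/anti-join, assuming two hypothetical single-column datasets @A and @B (INTERSECT and EXCEPT are available starting with SQL Server 2005):

```sql
-- hypothetical datasets, used only for illustration
DECLARE @A TABLE (ID int NOT NULL);
DECLARE @B TABLE (ID int NOT NULL);

INSERT INTO @A VALUES (1);
INSERT INTO @A VALUES (2);
INSERT INTO @A VALUES (3);
INSERT INTO @B VALUES (2);
INSERT INTO @B VALUES (3);
INSERT INTO @B VALUES (4);

-- horizontal joining: each row comes from only one of the datasets
SELECT ID FROM @A UNION SELECT ID FROM @B;     -- union: 1, 2, 3 and 4
SELECT ID FROM @A INTERSECT SELECT ID FROM @B; -- intersection: 2 and 3
SELECT ID FROM @A EXCEPT SELECT ID FROM @B;    -- subtraction: 1

-- semi-join: records from @A that have a match in @B
SELECT A.ID
FROM @A A
WHERE EXISTS (SELECT 1 FROM @B B WHERE B.ID = A.ID); -- 2 and 3

-- anti-join: records from @A that have no match in @B
SELECT A.ID
FROM @A A
WHERE A.ID NOT IN (SELECT B.ID FROM @B B); -- 1
```

The ID columns are declared NOT NULL on purpose: a NOT IN against a subquery that returns a NULL yields no rows at all, in which case NOT EXISTS is the safer way of writing the anti-join.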

25 March 2010

Business Intelligence: A Reporting Guy’s Issues

During the past 3 years I have mainly been supporting Oracle e-Business Suite (EBS) users with reports and knowledge about the system, and therefore most of the issues were related to it. In addition to the metadata system implemented in its system tables, Oracle tried to build an external metadata system for its ERP system, namely Metalink (I think it was replaced last year), though there were/are still many gaps in the documentation. It’s true that many such gaps derive from the customizations performed when implementing the ERP system, though I would estimate that these account for only about 20% of the gaps and refer mainly to the Flex Fields (customer-defined fields) used for various purposes.

There's the belief that everybody can write a query and/or an ad-hoc report. It’s true that writing a query is a simple task and in theory anybody could learn to do it without much effort, though there are other aspects, related to Software Engineering and Project Management and to a data professional's experience, that need to be considered. These include choosing the right data source, the right attributes and level of detail, designing the query or solution for the best performance (eventually building an index or using database objects that allow better performance) and reuse, using adequate business rules (e.g. ignoring inactive records or special business cases), synchronizing the logic with other reports (otherwise two people will show the management distinct numbers), mitigating the Data Quality and Deliverables Quality issues, identifying the gaps between reports, etc.

When multiple developers work on reporting requirements they should work as a team and share knowledge not only about the legacy system but also about users’ requirements, the techniques used and best practices. I know it’s difficult to bring cohesion to a team, especially when its members are dispersed all over the globe, and to make people produce deliverables as if they were written by the same person, though it’s not impossible to achieve with a little effort from all the parties involved.

Outsourcing is a hot topic these days, when in the context of the current economic crisis organizations are forced to reduce headcount and cut costs, and this has inevitably touched the reporting area too. Outsourcing makes sense when the interfaces between service providers and customers are well designed and implemented. Beyond the many benefits and issues outsourcing approaches come with, people have to consider that in order to create a report a developer needs not only knowledge about the legacy systems and the tools used to extract, transform and prepare the data, but also knowledge about the business itself, about users’ expectations and the organization’s culture, the latter two being difficult to experience in a disconnected and distributed environment.

Data Warehousing: Data Warehouse (A Personal Journey)

Any discussion on data warehousing topics, even an unconventional one, can’t avoid mentioning the two most widely adopted approaches in data warehousing, B. Inmon’s vs. R. Kimball’s methodologies. A lot of ink has already been consumed on this topic and it is difficult to come up with something new; however, I can add my experience and personal views on the topic. From the beginning I have to state that I can’t take either of the two sides, because from a philosophical viewpoint I am an adept of “the middle way” and, in addition, when choosing a methodology we have to consider the business’ requirements and objectives, the infrastructure, the experience of the resources, and many other factors. I don’t believe one method fits all purposes, therefore some flexibility is needed in this regard even from the most virulent advocates. After all, in the end what counts is the degree to which the final solution fits the purpose, and no matter how complex and perfect a methodology is, no matter what precautions are taken, given the complexity of software development projects there is always the risk of failure.

B. Inmon defines the data warehouse as a “subject-oriented, integrated, non-volatile and time-varying collection of data in support of the management’s decisions” [3]: subject-oriented because it is focused on an organization’s strategic subject areas, integrated because the data come from multiple legacy systems in order to provide a single overview, time-varying because the data warehouse’s content is time dependent, and non-volatile because in theory the data warehouse’s content is not updated but refreshed.

Within my small library and the internet articles I read on this topic, especially the ones from the Kimball University cycle, I can’t say I found a similarly direct definition of the data warehouse given by R. Kimball; the closest I could get to something in this direction is the data warehouse as a union of data marts, where in his definition a data mart is “a process-oriented subset of the overall organization’s data based on a foundation of atomic data, and that depends only on the physics of the data-measurement events, not on the anticipated user’s questions” [2]. This also reflects an important difference between the two approaches: in Inmon’s philosophy the data marts are updated through the data warehouse, the data in the warehouse being stored in 3rd normal form, while the data in the data marts are multidimensional and thus denormalized.

Even if it’s a nice conceptual tool intended to simplify data manipulation, I can’t say I’m a big fan of dimensional modeling, mainly because it can easily be misused to create awful (inflexible) monster models that can barely be used, it being sometimes impossible to work around them without redesigning them. Relational models could easily be misused too, though they are less complex as physical design, easier to model and offer greater flexibility, even if in theory the data’s normalization could add further complexity. However, there is always a trade-off between flexibility, complexity, performance, scalability, usability and reusability, to mention just a few of the dimensions associated with data in general and data quality in particular.

In order to overcome dimensional modeling issues R. Kimball recommends a four-step approach: first identifying the business process corresponding to a business measurement or event, secondly declaring the grain (level of detail), and only after that defining the dimensions and the facts [1]. I have to admit that starting from the business process is a plus for this framework, because in theory it allows better visibility over the processes, supporting process-based data analysis, though given the fact that a process could span multiple data elements or that multiple processes could partition the same data elements, this increases the complexity of such models. I find that a model based directly on the data elements allows more flexibility at the expense of the work needed to bring the data together, though such a model should also cover the processes in scope.

Building a data warehouse is quite a complex task, especially if we take into consideration the huge percentage of software project failures, which holds also in the data warehousing area. On the other side, I’m not sure how literally such statistics about software project failures can be taken, because different project methodologies and data collection methods are used and detailed information is not always given about the particularities of each project; it would however be interesting to know the failure rate per methodology. Occasionally some numbers are advanced that support the benefit of using one or another methodology, but, even ignoring the subjective approach of such justifications, they often lack adequate details to support them.

My first contact with building a data warehouse was almost 8 years ago, when the Asset Management System project I was supposed to work on included also the creation of a data warehouse. Frankly, few things are scarier than seeing two IT professionals fighting over what approach to use in order to design a data warehouse, and needless to say the fight lasted for several days, with calls with the customer, nerves, management involved, a whole arsenal of negotiations that looked like a never-ending story.

Such fights are sometimes part of the landscape and they should be avoided, the simplest alternative being to put together the advantages and disadvantages of the most important approaches and balance between them; unfortunately there are still professionals who don’t know how, or are not willing, to do that. The main problem in such cases is the time, which instead of being used constructively is wasted on futile fights. When a lot of time is wasted and a tight schedule applies, one is forced to do the whole work in less time, maybe leading to sloppy solutions.

A few years back I had the occasion to develop a data warehouse around the two ERP systems and the other smaller systems one of the customers I worked for had in place, SQL Server 2000 and its DTS (Data Transformation Services) functionality being of great help for this purpose. Even if I had some basic knowledge of the two data warehousing approaches, I had to build the initial data warehouse from scratch, evolving the initial solution over several years.

The design was quite simple: the DTS packages extracted the data from the legacy systems and dumped them into staging tables in normalized or denormalized form, and after several simple transformations the data were loaded into the production tables, the role of the multidimensional data marts being played successfully by views that scaled pretty well to the existing demands. Maybe many data warehouse developers would disregard such a solution, though it was quite a useful exercise and later helped me to understand more easily the literature on this topic and the issues related to it. In addition, while working on the data conversion of two ERP implementations I had to perform more complex ETL (Extract, Transform, Load) tasks than the ones considered in the data warehouse itself.
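To give an idea of what such a design could look like, here is a minimal sketch in plain T-SQL. It is not the actual solution: the StagingInvoices and Invoices tables, the vInvoicesByCustomerMonth view and all the columns are hypothetical, and the extraction step performed by the DTS packages is replaced by a simple INSERT.

```sql
-- hypothetical staging table, loaded as-is from a legacy system
CREATE TABLE StagingInvoices (
    InvoiceNumber varchar(20)
  , CustomerCode varchar(20)
  , InvoiceDate varchar(8)  -- assumed to arrive as yyyymmdd text
  , Amount varchar(20));

-- hypothetical production table
CREATE TABLE Invoices (
    InvoiceNumber varchar(20) NOT NULL PRIMARY KEY
  , CustomerCode varchar(20) NOT NULL
  , InvoiceDate datetime NOT NULL
  , Amount decimal(12, 2) NOT NULL);
GO

-- stands in for the extraction done by a package
INSERT INTO StagingInvoices VALUES (' INV001 ', 'c100', '20100315', '100.50');

-- simple transformations applied while loading the production table
INSERT INTO Invoices (InvoiceNumber, CustomerCode, InvoiceDate, Amount)
SELECT LTrim(RTrim(S.InvoiceNumber))
, Upper(LTrim(RTrim(S.CustomerCode)))
, Convert(datetime, S.InvoiceDate, 112)
, Cast(S.Amount AS decimal(12, 2))
FROM StagingInvoices S
WHERE S.InvoiceNumber IS NOT NULL;
GO

-- a view playing the role of a small data mart
CREATE VIEW vInvoicesByCustomerMonth
AS
SELECT I.CustomerCode
, Year(I.InvoiceDate) InvoiceYear
, Month(I.InvoiceDate) InvoiceMonth
, Sum(I.Amount) TotalAmount
, Count(*) NumberInvoices
FROM Invoices I
GROUP BY I.CustomerCode
, Year(I.InvoiceDate)
, Month(I.InvoiceDate);
GO
```

GO is the batch separator recognized by client tools such as Management Studio or sqlcmd; it is needed here because CREATE VIEW must be the first statement in its batch.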

In what concerns software development I am an adept of rapid evolutionary prototyping, because it allows getting customers’ feedback in early stages, making it possible to identify issues earlier as per customers’ perceptions, and in addition allows customers to get a feeling of what’s possible and what the application looks like. The prototyping method proved to be useful most of the time, I would actually say all the time, and it was often interesting to see how customers’ conceptualization of what they need changed with time, changes that looked simple leading to a partial redesign of the application. In other development approaches with long releases (e.g. waterfall) the customer gets a glimpse of the application late in the process, it often being impossible to redesign the application, so the customer has to live with what he got. Call me “old-fashioned”, but I am an adept of rapid evolutionary prototyping also in what concerns the creation of data warehouses, and even if people might argue that a data warehousing project is totally different from a typical development project, it should not be forgotten that almost all software development projects share many particularities, from planning to deployment and further to maintenance.

Even if B. Inmon also embraces the evolutionary/iterative approach in building a data warehouse, from a philosophical standpoint I feel that rapid evolutionary prototyping applied to data warehouses is closer to R. Kimball’s methodology, which comes down to choosing a functional key area and its essential business processes, building a data mart, and starting from there building other data marts for the other functional key areas, eventually integrating and aligning them in a common solution: the data warehouse. On the other side, when designing a software component or a module of an application you also have to consider the final goal, as the respective component or module will be part of a broader system, even if in some cases it could exist in isolation. The same can be said about the creation of data marts: even if a data mart is sometimes rooted in the needs of a department, you also have to look at the final goal and address the requirements from that perspective, or at least be aware of them.

References:

[1] Ross M., Kimball R. (2004). Fables and Facts: Do you know the difference between dimensional modeling truth and fiction? [Online] Available from: http://intelligent-enterprise.informationweek.com/info_centers/data_warehousing/showArticle.jhtml;jsessionid=530A0V30XJXTDQE1GHPSKH4ATMY32JVN?articleID=49400912 (Accessed: 18 March 2010)

[2] Kimball R., Caserta J. (2004). The Data Warehouse ETL Toolkit: Practical Techniques for Extracting, Cleaning, Conforming, and Delivering Data. Wiley Publishing Inc. ISBN: 0-7645-7923-1

[3] Inmon W.H. (2005). Building the Data Warehouse, 4th Ed. Wiley Publishing. ISBN: 978-0-7645-9944-6

24 March 2010

Knowledge Management: Definitions I (The Stored Procedure Case)

From the few books I roughly reviewed on SQL Server-related topics I liked D. Petkovic’s approach to defining the stored procedure, as he first introduces the batch, defined as “a sequence of Transact-SQL statements and procedural extensions” that “can be stored as a database object, as either a stored procedure or UDF” [3]. Now, even if I like the approach, I have a problem with how he introduced the routine, because he didn’t give a proper definition and just mentioned that a routine can be either a stored procedure or a UDF (user-defined function) [3]. On the other side, it’s not always necessary to introduce terms that are part of the commonly shared conceptual knowledge, though I would have found it useful and constructive if such a definition had been given.

A definition should be clear, accurate, comprehensive and simple, and should avoid confusion, eventually by specifying the intrinsic/extrinsic characteristics that differentiate the defined object from other objects and the context in which it is used. Unfortunately there are also definitions given by professionals that don’t meet these criteria; for example, A. Watt defines a stored procedure as “a module of code that allows you to reuse a desired piece of functionality and call that functionality by name” [5], while R. Dewson defines it as “a collection of compiled T-SQL commands that are directly accessible by SQL Server” [2], R. Rankins et al. as “one or more SQL commands stored in a database as an executable object” [4], and D. Comingore et al. as “stored collections of queries or logic used to fulfill a specific requirement” [1]. All the definitions are valid and, in spite of their similarities, I find them incomplete because they could be used just as well for defining a UDF.

Of course, by reading the chapter or the whole book in which the definition is given, and by comparing the concept with other concepts, people arrive at a more accurate form of the definition(s), though that’s not always efficient and constructive because the reader has to “fish” for all the directly related concepts and highlight the similarities/differences between them (e.g. stored procedures vs. UDFs vs. views vs. triggers). Usually, for easier assimilation and recall, I like to use Knowledge Mapping structures/techniques like Mind Maps or Concept Maps, which allow seeing the relations (including similarities/differences) between concepts and even identifying new associations between them. In addition, when learning concepts the form/sequence in which the concepts are presented also matters (maybe that’s why many people prefer a PowerPoint presentation to reading a whole book).

Actually a definition could be built starting from the identified characteristics/properties of concepts and the similarities/differences with other concepts. For example, Bill Inmon defines the data warehouse as “a subject-oriented, integrated, time-varying, non-volatile collection of data in support of the management's decision-making process” [6], and even if we could philosophize also on this subject and the intrinsic characteristics of a data warehouse, it reflects Bill Inmon’s conception in a clear, simple and direct manner. I could attempt to define a stored procedure using the following considerations:

- it encapsulates T-SQL statements and procedural extensions (input/output parameters, variables, loops, etc.);

- it can be executed only as a single statement and thus can’t be reused inside other DML or DDL statements;

- it’s a database object;

- it caches, optimizes and reuses query execution plans;

- it allows code modularization, thus allowing code reuse, easier/centralized code maintenance and moving business logic (including validation) to the backend;

- it minimizes the risk of SQL injection attacks;

- it can return multiple recordsets and supports parameterized calls, thus reducing network traffic;

- it enforces security by providing restricted access to tables;

- it allows some degree of portability and standardized access (given the fact that many RDBMS feature stored procedures);

- it allows specifying the execution context;

- it allows using output parameters, cursors, temporary tables and nested calls (procedure in procedure), creating and executing dynamic queries, accessing system resources, trapping and customizing errors, and performing transaction-based operations.

Some of the mentioned characteristics apply also to other database objects or are not essential enough to be mentioned in a general definition, thus giving a relatively accurate definition of stored procedures is not an easy task. Eventually I could focus on the first three points mentioned above, so that an approximate definition would reduce to the following formulation: “a stored procedure is a database object that encapsulates T-SQL statements and procedural extensions, the object being executed exclusively within a single statement using its name and the eventual parameters”. This definition might not be the best, though it’s workable and could be evolved in case new knowledge is discovered or essential new functionality is introduced.
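The three points retained in the definition could be illustrated with a minimal sketch. The Products table, the pSearchProducts procedure and its parameters are hypothetical names, chosen only for this example.

```sql
-- hypothetical table, used only for illustration
CREATE TABLE Products (
    ProductID int NOT NULL PRIMARY KEY
  , ProductName varchar(50) NOT NULL
  , ListPrice decimal(10, 2) NOT NULL);

INSERT INTO Products VALUES (1, 'Product A', 5.00);
INSERT INTO Products VALUES (2, 'Product B', 15.00);
GO

-- a database object that encapsulates T-SQL statements and procedural
-- extensions (input/output parameters, variables)
CREATE PROCEDURE pSearchProducts
    @MinPrice decimal(10, 2)
  , @NumberRecords int OUTPUT
AS
BEGIN
    SET NOCOUNT ON;

    SELECT P.ProductID
    , P.ProductName
    , P.ListPrice
    FROM Products P
    WHERE P.ListPrice >= @MinPrice;

    -- number of records returned by the previous statement
    SET @NumberRecords = @@ROWCOUNT;
END
GO

-- executed as a single statement, using its name and parameters
DECLARE @NumberRecords int;

EXEC pSearchProducts @MinPrice = 10, @NumberRecords = @NumberRecords OUTPUT;

SELECT @NumberRecords NumberRecords; -- 1, only Product B qualifies

-- not allowed, a stored procedure can't be used as a data source in a query:
-- SELECT * FROM pSearchProducts
```

The commented-out query at the end is what differentiates the procedure from a table-valued UDF or a view, both of which can be referenced in a FROM clause.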

Note:

Unfortunately, in several books on data warehousing topics I started to read recently I found similarly incomplete/vague definitions that, from my point of view, are not adequate given the complexity of the subjects presented, thus leaving a lot of room for digressions. Of course, in such technical books the weight is more on the use of the presented concepts than on the concepts’ definition, though I’m expecting more from this type of books!

Disclaimer:

I’m not trying to denigrate the impressive work of other professionals, and I know that I’m making even more mistakes than other people do; I’m just trying to point out a fact I noticed and consider important: the need to give a proper, accurate definition of the terms introduced in a book or any other form of communication.

References:

[1] Comingore D., Hinson D. (2006). Professional SQL Server™ 2005 CLR Programming. Wiley Publishing. ISBN: 978-0-470-05403-1.

[2] Dewson R. (2008). Beginning SQL Server 2008 for Developers: From Novice to Professional. Apress. ISBN: 978-1-4302-0584-5

[3] Petkovic D. (2008). Microsoft® SQL Server™ 2008: A Beginner’s Guide. McGraw-Hill. ISBN: 0-07-154639-1

[4] Rankins R., Bertucci P., Gallelli C., Silverstein A.T. (2007). Microsoft® SQL Server 2005 Unleashed. Sams Publishing. ISBN: 0-672-32824-0

[5] Watt A. (2006). Microsoft SQL Server 2005 for Dummies. Wiley Publishing. ISBN: 978-0-7645-7755-0.

[6] Inmon W.H. (2005) Building the Data Warehouse, 4th Ed. Wiley Publishing. ISBN: 978-0-7645-9944-6

23 March 2010

Data Warehousing: Data Transformation (Definitions)

"A set of operations applied to source data before it can be stored in the destination, using Data Transformation Services (DTS). For example, DTS allows new values to be calculated from one or more source columns, or a single column to be broken into multiple values to be stored in separate destination columns. Data transformation is often associated with the process of copying data into a data warehouse." (Microsoft Corporation, "SQL Server 7.0 System Administration Training Kit", 1999)

"The process of reformatting data based on predefined rules. Most often identified as part of ETL (extraction, transformation, and loading) but not exclusive to ETL, transformation can occur on the CDI hub, which uses one of several methods to transform the data from the source systems before matching it." (Evan Levy & Jill Dyché, "Customer Data Integration", 2006)

"Any change to the data, such as during parsing and standardization." (Danette McGilvray, "Executing Data Quality Projects", 2008)

"A process by which the format of data is changed so it can be used by different applications." (Judith Hurwitz et al, "Service Oriented Architecture For Dummies" 2nd Ed., 2009)

"Converting data from one format to another, making the data reflect the needs of the target application. Used in almost any data initiative, for instance, a data service or an ETL (extract, transform, load) process." (Tony Fisher, "The Data Asset", 2009)

"Changing the format, structure, integrity, and/or definitions of data from the source database to comply with the requirements of a target database." (DAMA International, "The DAMA Dictionary of Data Management", 2011)

"The SSIS data flow component that modifies, summarizes, and cleans data." (Microsoft, "SQL Server 2012 Glossary", 2012)

"Data transformation is the process of making the selected data compatible with the structure of the target database. Examples include: format changes, structure changes, semantic or context changes, deduping, and reordering." (Piethein Strengholt, "Data Management at Scale", 2020)

"1. In data warehousing, the process of changing data extracted from source data systems into arrangements and formats consistent with the schema of the data warehouse. 2. In Integration Services, a data flow component that aggregates, merges, distributes, and modifies column data and rowsets." (Microsoft Technet)

17 March 2010

ERP Implementations: Preventing ERP Implementation Projects' Failure

- not understanding what an ERP is about - functionality and intrinsic requirements;

- not evaluating/assessing ERP's functionality beforehand;

- not getting the acceptance/involvement of all stakeholders + politics;

- not addressing the requirements beforehand, especially in the area of processes;

- not evolving/improving your processes;

- not addressing the data cleaning/conversion adequately;

- not integrating the ERP with the other solutions existing in place;

- not having in place a (Master) Data Management vision/policy that addresses especially data quality and data ownership;

- not involving the (key) users early in the project;

- not training and motivating adequately the users;

- lack of a reporting framework, i.e. a set of reports (reporting tools) that enables users to make the most of the ERP;

- lack of communication between business and IT professionals;

- relying too much on external resources, especially in the PM area;

- the migration of workforce inside the organization or outside (e.g. consultants coming and leaving);

- inadequate PM, lack of leadership;

- the lack of a friendly User Interface (referring to the ERP system itself);

- inadequate post-Go Live support from the ERP vendor and business itself;

- the ERP’s inability to evolve with the business;

- too many defects in the live system (resulting often from inadequate testing but also from vendor-related issues);

- too many changes in the last 100 m of the project;

- organization's culture;

- attempting to do too much in less time than needed/allocating more time than needed.

On the same list I would also add the following reasons:

- not understanding business’ needs and the business as a whole;

- the inadequate choice/use of methodologies related to PM, Software Development, Data Quality in particular and Data/Knowledge Management in general;

- ignoring Software Projects’ fallacies in general and other ERP projects’ failure causes in particular (not learning from others’ mistakes);

- ignoring best practices in the ERP/Software Development/Project Management/Data Management fields;

- not having a Risk Mitigation (Response) Plan [falls actually under inadequate PM but given its importance deserves to be mentioned separately];

- addressing requirements unilaterally instead of equidistantly (the project becoming one department’s project or even a one-man show);

- ignoring special reporting requirements during implementation phase;

- unrealistic expectations vs. not meeting business’ expectations: ROI, the inability to answer business (decision-making) questions (actually it refers mainly to the existing reports but also to the system itself when the needed data are not available at the needed grain);

- being unable to adequately quantify the ROI of the ERP system;

- not making expectations explicit and not communicating them on time and in a clear manner;

- not meeting governmental requirements (SOX, IRS, etc.);

- not monitoring Post-Go Live systems’ use/adoption (e.g. by defining Health/Growth metrics) and not addressing adoption issues in time;

- lack of mapping and transferring/distributing the existing knowledge related to the ERP system (experts, processes, documentation, reports, best practices, etc.);

- not integrating the requirements/needs of the customer’s customers/vendors (supply chain vs. sales chain);

- using inadequate technologies/tools to solve ERP-related issues;

- the inability of ERP systems to integrate with new advances in technology ([3] refers to it as technological convergence);

- the hidden costs of ERP implementations and Post-Go Live support;

- expecting IT/business to solve all the problems;

- over-customization of software [1];

- over-integration of software;

- not having a global technological view (on how the ERP fits in the technological infrastructure);

- underestimating project’s complexity;

- unbalanced daily work vs. project workload [2];

- choosing wrong time for implementation [2];

- engaging in too many corporate projects [3];

- multiple vendors on the project [3];

- not having clear phases, deliverables, boundaries, accountability, quality control components [3], communication channels defined;

- not having an external project audit committee [3];

- having the management over-committed [3];

- inadequate skill sets [3].

Given the multiple mitigation solutions/approaches for each of the above causes and the interconnectedness between them, each of the above topics deserves further elaboration. There is also a lot of philosophy involved; some causes are more important than others, though in time any of them could be a cause of failure. Failure in its turn is a vague concept, highly dependent on context: an ERP implementation could be successful based on initial expectations but fail to be adopted by the business, just as it could meet business expectations in the short term but not be as flexible as intended in the long term. In the end it depends also on Users’ and Management’s perception, on the issues appearing after Go Live and their gravity, though many such issues are inherent, being just a projection of the evolving business and of the system’s maturity.

Preventing the failure of an ERP implementation relies on the capacity to address all, or the most important, of the above issues, being aware of them and making at least a Risk Mitigation Plan with a few possible solutions to address them. In a project of such complexity and with so many constraints planning may seem useless, but it is a good exercise: it prepares you for the work and events ahead!

References:

[1] Barton P., (2001). Enterprise Resource Planning: Factors Affecting Success and Failure. [Online] Available from: http://www.umsl.edu/~sauterv/analysis/488_f01_papers/barton.htm (Accessed: 17 March 2009)

[2] ERP Wire. (2009). Analyzing ERP failures in Hershey. [Online] Available from: http://www.erpwire.com/erp-articles/failure-story-in-erp-process.htm (Accessed: 17 March 2009)

[3] Madara E., (2007). A Recipe and Ingredients for ERP Failure. [Online] Available from: http://www.articlesbase.com/software-articles/a-recipe-and-ingredients-for-erp-failure-124383.html (Accessed: 17 March 2009)

16 March 2010

MS Office: Excel for SQL Developers IV (Differences Between Two Datasets)

Given two attributes ColumnX and ColumnY from tables A, respectively B, let’s first look at how the difference flag constraint could be written for each data type category:

    --text attributes:
    CASE
        WHEN IsNull(A.ColumnX, '') <> IsNull(B.ColumnY, '') THEN 'Y'
        ELSE 'N'
    END DiffColumnXYFlag

    --amount attributes:
    CASE
        WHEN IsNull(A.ColumnX, 0) - IsNull(B.ColumnY, 0) NOT BETWEEN -0.05 AND 0.05 THEN 'Y'
        ELSE 'N'
    END DiffColumnXYFlag

    --numeric attributes:
    CASE
        WHEN IsNull(A.ColumnX, 0) <> IsNull(B.ColumnY, 0) THEN 'Y'
        ELSE 'N'
    END DiffColumnXYFlag

    --date attributes:
    CASE
        WHEN IsNull(DateDiff(d, A.ColumnX, B.ColumnY), -1) <> 0 THEN 'Y'
        ELSE 'N'
    END DiffColumnXYFlag

Notes:

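1. Bit attributes can be treated as numeric as long as they are considered bi-state. For tri-state values, in which NULL is also considered a distinct value, the constraint must be changed, the most natural way being to translate NULL to -1. Because IsNull returns the data type of its first argument, and converting -1 to bit yields 1, a bit attribute has to be cast to a signed integer type first:

CASE

WHEN IsNull(Cast(A.ColumnX As smallint), -1) <> IsNull(Cast(B.ColumnY As smallint), -1) THEN 'Y'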

ELSE 'N'

END DiffColumnXYFlag

2. In most of the examples I worked with, the difference between two dates was calculated at day level, though it might be necessary to compare the values at smaller time intervals, to the order of hours, minutes or seconds. The only thing that needs to be changed then is the first parameter of the DateDiff function. There could also be situations in which a difference of several seconds is acceptable; a BETWEEN operator could then be used, as in the case of numeric vs. amount values.

3. In case one of the attributes is missing, the corresponding difference flag could directly take the value ‘N’ or ‘n/a’.

4. It could happen that there are mismatches between the attributes’ data types; in this case at least one of them must be converted to a form that could be used in further processing.
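The constraints and notes above can be tried out on a small scale. What follows is only a sketch under stated assumptions: the table variables @A and @B, their columns and their content are hypothetical, the multi-row VALUES syntax assumes SQL Server 2008 or later, and the 5 seconds tolerance is an arbitrary choice. The bit column is cast to smallint before IsNull is applied, because IsNull returns the data type of its first argument and -1 converted to bit becomes 1.

```sql
-- hypothetical sample data, made up for illustration only
DECLARE @A TABLE (Id int PRIMARY KEY, Name nvarchar(50), Amount decimal(10,2), IsActive bit, ModifiedDate datetime);
DECLARE @B TABLE (Id int PRIMARY KEY, Name nvarchar(50), Amount decimal(10,2), IsActive bit, ModifiedDate datetime);

INSERT INTO @A VALUES
  (1, N'Bolt', 10.00, 1, '2010-03-01T10:00:00')
, (2, N'Nut', 5.50, NULL, '2010-03-02T08:00:00')
, (3, NULL, 7.25, 0, '2010-03-03T09:00:00');

INSERT INTO @B VALUES
  (1, N'Bolt', 10.03, 1, '2010-03-01T10:00:04')
, (2, N'Nut', 5.70, 0, '2010-03-05T08:00:00')
, (3, N'Washer', 7.25, 0, NULL);

SELECT IsNull(A.Id, B.Id) Id
, CASE -- text attribute
    WHEN IsNull(A.Name, '') <> IsNull(B.Name, '') THEN 'Y'
    ELSE 'N'
  END DiffNameFlag
, CASE -- amount attribute with an error margin of 0.05
    WHEN IsNull(A.Amount, 0) - IsNull(B.Amount, 0) NOT BETWEEN -0.05 AND 0.05 THEN 'Y'
    ELSE 'N'
  END DiffAmountFlag
, CASE -- tri-state bit attribute: NULL is translated to -1 after the cast
    WHEN IsNull(Cast(A.IsActive As smallint), -1) <> IsNull(Cast(B.IsActive As smallint), -1) THEN 'Y'
    ELSE 'N'
  END DiffIsActiveFlag
, CASE -- date attribute compared in seconds, with a tolerance of 5 seconds;
       -- a NULL on either side makes DateDiff return NULL, so the row falls into ELSE
    WHEN DateDiff(s, A.ModifiedDate, B.ModifiedDate) BETWEEN -5 AND 5 THEN 'N'
    ELSE 'Y'
  END DiffModifiedDateFlag
FROM @A A
  FULL OUTER JOIN @B B
    ON A.Id = B.Id
ORDER BY 1;

-- expected flags (Name, Amount, IsActive, ModifiedDate):
-- Id 1: N, N, N, N
-- Id 2: N, Y, Y, Y
-- Id 3: Y, N, N, Y
```

Note that in this sketch the date flag also takes the value 'Y' when both dates are NULL; whether that is the desired behavior depends on the data being compared.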

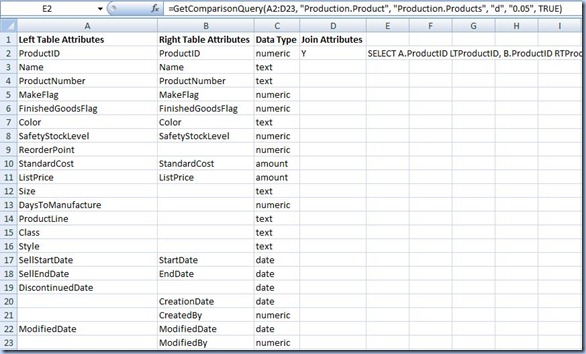

Thus a macro for this purpose would take as input a range listing the attributes from the two tables (first and second column), the data type category (third column) and a marker for the columns participating in the join constraint (a non-empty fourth column), two parameters designating the names of the left and right tables participating in the FULL OUTER JOIN, the time interval considered, the error margin (e.g. e for the interval [-e, e]) and a flag indicating whether to show all the combined data or only the records for which at least one difference was found.

    Function GetComparisonQuery(ByVal rng As Range, _
        ByVal LeftTable As String, ByVal RightTable As String, _
        ByVal TimeInterval As String, ByVal ErrorMargin As String, _
        ByVal ShowOnlyDifferencesFlag As Boolean) As String
        'builds the code for a comparison query between two tables
        Dim attributes As String
        Dim differences As String
        Dim constraint As String
        Dim whereConstraints As String
        Dim joinConstraints As String
        Dim columnX As String
        Dim columnY As String
        Dim index As Integer

        For index = 1 To rng.Rows.Count
            columnX = Trim(rng.Cells(index, 1).Value)
            columnY = Trim(rng.Cells(index, 2).Value)

            If Len(columnX) > 0 Or Len(columnY) > 0 Then
                'listing the attributes of the left (LT) and right (RT) table
                If Len(columnX) > 0 Then
                    attributes = attributes & ", A." & columnX & " LT" & columnX & vbCrLf
                End If
                If Len(columnY) > 0 Then
                    attributes = attributes & ", B." & columnY & " RT" & columnX & vbCrLf
                End If

                constraint = ""
                If Len(Trim(rng.Cells(index, 4).Value)) = 0 Then
                    If Len(columnX) > 0 And Len(columnY) > 0 Then
                        'creating the difference flag
                        Select Case Trim(rng.Cells(index, 3).Value)
                        Case "text"
                            constraint = "CASE" & vbCrLf & _
                                " WHEN IsNull(A." & columnX & " , '') <> IsNUll(B." & columnY & ", '') THEN 'Y'" & vbCrLf & _
                                " ELSE 'N'" & vbCrLf & _
                                " END"
                        Case "amount"
                            constraint = "CASE" & vbCrLf & _
                                " WHEN IsNull(A." & columnX & " , 0) - IsNUll(B." & columnY & ", 0) NOT BETWEEN -" & ErrorMargin & " AND " & ErrorMargin & " THEN 'Y'" & vbCrLf & _
                                " ELSE 'N'" & vbCrLf & _
                                " END"
                        Case "numeric"
                            constraint = "CASE" & vbCrLf & _
                                " WHEN IsNull(A." & columnX & " , 0) <> IsNUll(B." & columnY & ", 0) THEN 'Y'" & vbCrLf & _
                                " ELSE 'N'" & vbCrLf & _
                                " END"
                        Case "date"
                            constraint = "CASE" & vbCrLf & _
                                " WHEN DateDiff(" & TimeInterval & ", A." & columnX & ", B." & columnY & ")<>0 THEN 'Y'" & vbCrLf & _
                                " ELSE 'N'" & vbCrLf & _
                                " END"
                        Case Else 'error
                            MsgBox "Incorrect data type provided for " & index & " row!", vbCritical
                        End Select

                        If ShowOnlyDifferencesFlag Then
                            whereConstraints = whereConstraints & " OR " & constraint & " = 'Y'" & vbCrLf
                        End If

                        differences = differences & ", " & constraint & " Diff" & columnX & "Flag" & vbCrLf
                    Else
                        'one of the attributes is missing
                        differences = differences & ", 'n/a' Diff" & IIf(Len(columnX) > 0, columnX, columnY) & "Flag" & vbCrLf
                    End If
                Else
                    'the prefix is 8 characters long (4 spaces, AND, 1 space), matching the trimming below
                    joinConstraints = joinConstraints & "    AND A." & columnX & " = B." & columnY & vbCrLf
                End If
            End If
        Next

        'removing the leading separators
        If Len(attributes) > 0 Then
            attributes = Right(attributes, Len(attributes) - 2)
        End If
        If Len(joinConstraints) > 0 Then
            joinConstraints = Right(joinConstraints, Len(joinConstraints) - 8)
        End If
        If Len(whereConstraints) > 0 Then
            whereConstraints = Right(whereConstraints, Len(whereConstraints) - 4)
        End If

        'building the comparison query
        GetComparisonQuery = "SELECT " & attributes & _
            differences & _
            "FROM " & LeftTable & " A" & vbCrLf & _
            " FULL OUTER JOIN " & RightTable & " B" & vbCrLf & _
            " ON " & joinConstraints & _
            IIf(ShowOnlyDifferencesFlag And Len(whereConstraints) > 0, "WHERE " & whereConstraints, "")
    End Function

The query returned by the macro for the above example, based on attributes from the Production.Product table of the AdventureWorks database and the Production.Products table created in the Saving Data With Stored Procedures post:

    SELECT A.ProductID LTProductID
    , B.ProductID RTProductID
    , A.Name LTName
    , B.Name RTName
    , A.ProductNumber LTProductNumber
    , B.ProductNumber RTProductNumber
    , A.MakeFlag LTMakeFlag
    , B.MakeFlag RTMakeFlag
    , A.FinishedGoodsFlag LTFinishedGoodsFlag
    , B.FinishedGoodsFlag RTFinishedGoodsFlag
    , A.Color LTColor
    , B.Color RTColor
    , A.SafetyStockLevel LTSafetyStockLevel
    , B.SafetyStockLevel RTSafetyStockLevel
    , A.ReorderPoint LTReorderPoint
    , A.StandardCost LTStandardCost
    , B.StandardCost RTStandardCost
    , A.ListPrice LTListPrice
    , B.ListPrice RTListPrice
    , A.Size LTSize
    , A.DaysToManufacture LTDaysToManufacture
    , A.ProductLine LTProductLine
    , A.Class LTClass
    , A.Style LTStyle
    , A.SellStartDate LTSellStartDate
    , B.StartDate RTSellStartDate
    , A.SellEndDate LTSellEndDate
    , B.EndDate RTSellEndDate
    , A.DiscontinuedDate LTDiscontinuedDate
    , B.CreationDate RT
    , B.CreatedBy RT
    , A.ModifiedDate LTModifiedDate
    , B.ModifiedDate RTModifiedDate
    , B.ModifiedBy RT
    , CASE WHEN IsNull(A.Name , '') <> IsNUll(B.Name, '') THEN 'Y' ELSE 'N' END DiffNameFlag
    , CASE WHEN IsNull(A.ProductNumber , '') <> IsNUll(B.ProductNumber, '') THEN 'Y' ELSE 'N' END DiffProductNumberFlag
    , CASE WHEN IsNull(A.MakeFlag , 0) <> IsNUll(B.MakeFlag, 0) THEN 'Y' ELSE 'N' END DiffMakeFlagFlag
    , CASE WHEN IsNull(A.FinishedGoodsFlag , 0) <> IsNUll(B.FinishedGoodsFlag, 0) THEN 'Y' ELSE 'N' END DiffFinishedGoodsFlagFlag
    , CASE WHEN IsNull(A.Color , '') <> IsNUll(B.Color, '') THEN 'Y' ELSE 'N' END DiffColorFlag
    , CASE WHEN IsNull(A.SafetyStockLevel , 0) <> IsNUll(B.SafetyStockLevel, 0) THEN 'Y' ELSE 'N' END DiffSafetyStockLevelFlag
    , 'n/a' DiffReorderPointFlag
    , CASE WHEN IsNull(A.StandardCost , 0) - IsNUll(B.StandardCost, 0) NOT BETWEEN -0.05 AND 0.05 THEN 'Y' ELSE 'N' END DiffStandardCostFlag
    , CASE WHEN IsNull(A.ListPrice , 0) - IsNUll(B.ListPrice, 0) NOT BETWEEN -0.05 AND 0.05 THEN 'Y' ELSE 'N' END DiffListPriceFlag
    , 'n/a' DiffSizeFlag
    , 'n/a' DiffDaysToManufactureFlag
    , 'n/a' DiffProductLineFlag
    , 'n/a' DiffClassFlag
    , 'n/a' DiffStyleFlag
    , CASE WHEN DateDiff(d, A.SellStartDate, B.StartDate)<>0 THEN 'Y' ELSE 'N' END DiffSellStartDateFlag
    , CASE WHEN DateDiff(d, A.SellEndDate, B.EndDate)<>0 THEN 'Y' ELSE 'N' END DiffSellEndDateFlag
    , 'n/a' DiffDiscontinuedDateFlag
    , 'n/a' DiffCreationDateFlag
    , 'n/a' DiffCreatedByFlag
    , CASE WHEN DateDiff(d, A.ModifiedDate, B.ModifiedDate)<>0 THEN 'Y' ELSE 'N' END DiffModifiedDateFlag
    , 'n/a' DiffModifiedByFlag
    FROM Production.Product A
     FULL OUTER JOIN Production.Products B
     ON A.ProductID = B.ProductID
    WHERE CASE WHEN IsNull(A.Name , '') <> IsNUll(B.Name, '') THEN 'Y' ELSE 'N' END = 'Y'
     OR CASE WHEN IsNull(A.ProductNumber , '') <> IsNUll(B.ProductNumber, '') THEN 'Y' ELSE 'N' END = 'Y'
     OR CASE WHEN IsNull(A.MakeFlag , 0) <> IsNUll(B.MakeFlag, 0) THEN 'Y' ELSE 'N' END = 'Y'
     OR CASE WHEN IsNull(A.FinishedGoodsFlag , 0) <> IsNUll(B.FinishedGoodsFlag, 0) THEN 'Y' ELSE 'N' END = 'Y'
     OR CASE WHEN IsNull(A.Color , '') <> IsNUll(B.Color, '') THEN 'Y' ELSE 'N' END = 'Y'
     OR CASE WHEN IsNull(A.SafetyStockLevel , 0) <> IsNUll(B.SafetyStockLevel, 0) THEN 'Y' ELSE 'N' END = 'Y'
     OR CASE WHEN IsNull(A.StandardCost , 0) - IsNUll(B.StandardCost, 0) NOT BETWEEN -0.05 AND 0.05 THEN 'Y' ELSE 'N' END = 'Y'
     OR CASE WHEN IsNull(A.ListPrice , 0) - IsNUll(B.ListPrice, 0) NOT BETWEEN -0.05 AND 0.05 THEN 'Y' ELSE 'N' END = 'Y'
     OR CASE WHEN DateDiff(d, A.SellStartDate, B.StartDate)<>0 THEN 'Y' ELSE 'N' END = 'Y'
     OR CASE WHEN DateDiff(d, A.SellEndDate, B.EndDate)<>0 THEN 'Y' ELSE 'N' END = 'Y'
     OR CASE WHEN DateDiff(d, A.ModifiedDate, B.ModifiedDate)<>0 THEN 'Y' ELSE 'N' END = 'Y'

Notes:

1. The macro doesn’t generate an ORDER BY clause, though one could easily be added manually.

2. Not all join constraints are simple enough to be reduced to one or more equalities; on the other hand, we have to consider that the most time-consuming task is listing the attributes and the difference flags.

3. Sometimes it’s easier to create two extracts: the first with all the records from the left table and the matches from the right table (left join), the second with all the records from the right table and the matches from the left table (right join).

4. Given that the attributes participating in the join clause should in theory match, each pair of such attributes could be merged into one attribute using the formula: IsNull(A.ColumnX, B.ColumnY) As ColumnXY.

5. In order to show all the data from the two tables and not only the differences, all that needs to be done is to change the value of the last parameter from true to false:

=GetComparisonQuery(A2:D23, "Production.Product", "Production.Products", "d", "0.05", false)

6. For the TimeInterval parameter, only the values accepted by the datepart parameter (the first parameter) of SQL Server’s DateDiff function should be provided.

7. Please note that no validation is made for the input parameters.
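Notes 1, 3 and 4 can be illustrated on a small scale. The following is only a sketch: the table variables @L and @R and their content are hypothetical stand-ins for the two product tables, and the multi-row VALUES syntax assumes SQL Server 2008 or later.

```sql
-- hypothetical stand-ins for the left and right table
DECLARE @L TABLE (ProductID int PRIMARY KEY, Name nvarchar(50));
DECLARE @R TABLE (ProductID int PRIMARY KEY, Name nvarchar(50));

INSERT INTO @L VALUES (1, N'Bolt'), (2, N'Nut');
INSERT INTO @R VALUES (2, N'Nut M8'), (3, N'Washer');

-- notes 1 and 4: join attributes merged into one column, ORDER BY added manually
SELECT IsNull(A.ProductID, B.ProductID) ProductID
, A.Name LTName
, B.Name RTName
, CASE
    WHEN IsNull(A.Name, '') <> IsNull(B.Name, '') THEN 'Y'
    ELSE 'N'
  END DiffNameFlag
FROM @L A
  FULL OUTER JOIN @R B
    ON A.ProductID = B.ProductID
ORDER BY IsNull(A.ProductID, B.ProductID);

-- note 3, first extract: all the records from the left table and the matches from the right table
SELECT A.ProductID
, A.Name LTName
, B.Name RTName
FROM @L A
  LEFT JOIN @R B
    ON A.ProductID = B.ProductID;

-- note 3, second extract: all the records from the right table and the matches from the left table
SELECT B.ProductID
, A.Name LTName
, B.Name RTName
FROM @L A
  RIGHT JOIN @R B
    ON A.ProductID = B.ProductID;
```

The first query returns the three products 1, 2 and 3, each of them flagged with 'Y', while the two extracts return two records each. All three statements need to be run in the same batch, because table variables are visible only within the batch that declares them.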

About Me

- Adrian

- IT Professional with more than 24 years experience in IT in the area of full life-cycle of Web/Desktop/Database Applications Development, Software Engineering, Consultancy, Data Management, Data Quality, Data Migrations, Reporting, ERP implementations & support, Team/Project/IT Management, etc.