Business Intelligence Series

When choosing the attributes for a report, there are several important sets of attributes that need to be considered:

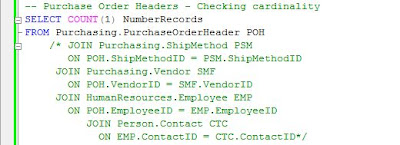

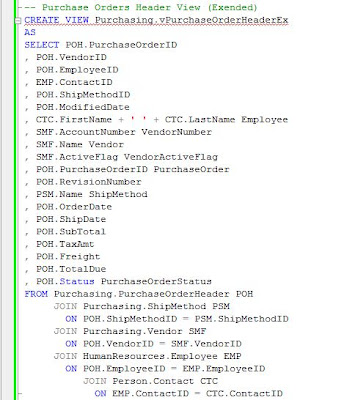

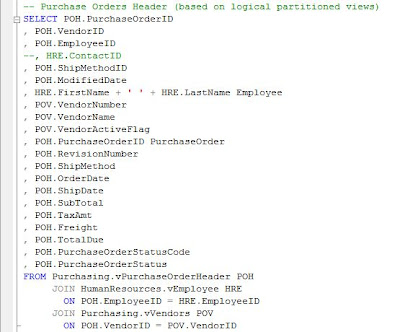

Unique Identifiers

Together with the various Names (e.g. Vendor Name, Customer Name) associated with entities, a report should also include the “unique identifier” (UID) of each entity, even when it is formed from more than one attribute. The UID makes it possible, for example, to detect duplicate records in a report, or to match (join) the report’s data with other data sets in order to pull further details or to analyze the data further. For example, in a PO report at PO Shipment level a unique (natural) key can be formed from the PO Number, Line Number and Shipment Number. For a Vendor, either the Vendor Name or the GSL (Global Supplier Location) Number could be used, though the latter is more adequate because it is more general and accurate, which makes the Vendor easier to identify. In theory, the database (surrogate) unique identifiers could be used for the same purpose: the one from the PO Shipments table, the element that dictates the report’s level of detail, and respectively the Vendor ID. However, even if surrogate UIDs are easier to use in joins, they can create confusion and overload the report, given that surrogate UIDs then need to be provided for the other elements as well.
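As an illustration of the duplicate check that a natural key makes possible, here is a minimal Python sketch. The rows, the field names (`po_number`, `line_number`, `shipment_number`) and the helper functions are assumptions made up for this example; they do not come from any particular system.

```python
from collections import Counter

# Hypothetical PO shipment rows as they might appear in a report extract.
rows = [
    {"po_number": "PO-1001", "line_number": 1, "shipment_number": 1, "vendor_name": "Acme"},
    {"po_number": "PO-1001", "line_number": 1, "shipment_number": 2, "vendor_name": "Acme"},
    {"po_number": "PO-1001", "line_number": 1, "shipment_number": 1, "vendor_name": "Acme"},
]

# Assumed natural key at PO Shipment level.
KEY_FIELDS = ("po_number", "line_number", "shipment_number")


def natural_key(row, key_fields=KEY_FIELDS):
    """Build the composite natural key of a row as a tuple."""
    return tuple(row[field] for field in key_fields)


def find_duplicate_keys(rows, key_fields=KEY_FIELDS):
    """Return the keys that occur more than once, together with their counts."""
    counts = Counter(natural_key(row, key_fields) for row in rows)
    return {key: n for key, n in counts.items() if n > 1}


duplicates = find_duplicate_keys(rows)
print(duplicates)
```

The same key tuple could also serve as the lookup key when matching the report against another data set.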

Documents like Invoices carry both an external and an internal unique identifier: the Invoice Number together with the Vendor, a combination that is typically unique in a system, forms the external UID, while the Document or Voucher Number is used as the internal UID. The external UID is easier to use for external-facing considerations, while the internal UID is easier to use for internal needs, so it makes sense to include both types of unique identifiers.

Quantities & Related Attributes

In Item-related reports it makes sense, most of the time, to also include the quantities (e.g. Transaction, Ordered, Delivered, Invoiced, On-Hand Quantities) together with the Unit of Measure (UOM) in which they are expressed. A distinction has to be made between the Primary UOM, the UOM in which the Item is stored, and the Transactional UOM, the UOM in which the Item is transacted; for example, the Purchasing UOM, Sales UOM or Transaction UOM can differ from the Primary UOM in which the Item is stored in the Warehouse. In such cases the Primary UOM should be provided together with the Transactional UOM, and possibly also the UOM Conversion Rate, when applicable.
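A small Python sketch of why the Conversion Rate belongs next to the two UOMs: with it, a reader can recompute the quantity in the Primary UOM. The field names and the convention that the rate is the number of Primary UOM units per Transactional UOM unit are assumptions for this example; real systems may store the rate the other way round.

```python
from decimal import Decimal


def to_primary_quantity(transaction_qty, conversion_rate):
    """Convert a quantity from the Transactional UOM into the Primary UOM.

    Assumes conversion_rate = Primary UOM units contained in one Transactional UOM unit.
    """
    return Decimal(str(transaction_qty)) * Decimal(str(conversion_rate))


# Hypothetical report line: 3 boxes ordered, 12 pieces (EA) per box.
line = {
    "item": "Bolt M8",
    "ordered_qty": 3,
    "purchasing_uom": "BOX",
    "primary_uom": "EA",
    "uom_conversion_rate": 12,
}

primary_qty = to_primary_quantity(line["ordered_qty"], line["uom_conversion_rate"])
print(primary_qty, line["primary_uom"])
```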

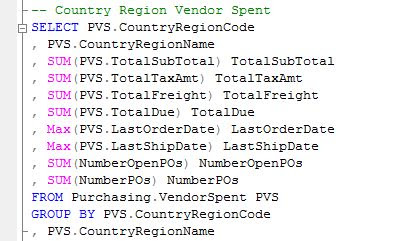

Prices/Amounts & Related Attributes

For Item-related reports, and not only for them, include the various Prices (e.g. Sales, Purchase, Standard Price) together with the Currency Code, even if only one Currency is used; the same rule applies to the amounts stored (e.g. Invoice, Sales Order, Purchase amounts). For financial reports it’s advisable to show both the functional amounts, expressed in the Currency used by the GL (General Ledger), and the transactional amounts, expressed in the Currency used in the transaction. When the level of detail allows it, also show the Quantity and the Price Unit used to calculate the amounts, as well as any Exchange Rate or UOM Conversion Rate used. When available, also include the Period in which the Amount was booked in the system.
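The relationship between these attributes can be sketched in Python as follows. The formulas are assumptions for illustration: the amount is taken as Quantity × Price / Price Unit (the price being quoted per Price Unit items), and the functional amount as the transactional amount multiplied by the Exchange Rate and rounded to two decimals. Actual systems may apply different rounding rules or rate directions.

```python
from decimal import Decimal, ROUND_HALF_UP


def line_amount(quantity, price, price_unit=1):
    """Transactional amount, assuming the price is quoted per `price_unit` items."""
    return Decimal(str(quantity)) * Decimal(str(price)) / Decimal(str(price_unit))


def to_functional(amount, exchange_rate, places="0.01"):
    """Convert a transactional amount into the functional (GL) Currency."""
    converted = amount * Decimal(str(exchange_rate))
    return converted.quantize(Decimal(places), rounding=ROUND_HALF_UP)


# Hypothetical line: 250 items at 12.50 per 100 items, booked at an exchange rate of 1.10.
transactional = line_amount(quantity=250, price="12.50", price_unit=100)
functional = to_functional(transactional, exchange_rate="1.10")
print(transactional, functional)
```

Having Quantity, Price, Price Unit and Exchange Rate in the report allows a user to redo this calculation and spot amounts that do not reconcile.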

Dates

Typically the Document Date (e.g. Invoice Date, Order Date) and the Document Creation Date should be included, together with the other Dates important for the business or for data analysis (e.g. Need By Date, GL Date, Value Date). In general the Document Date or Document Creation Date, plus the GL Date for financial reports, should be mandatory attributes because they can be used to segment (partition) a data set into time units (e.g. days, weeks, months, periods, years).
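A minimal Python sketch of such a segmentation, here by calendar month of the Invoice Date. The invoice rows and field names are hypothetical, and calendar months are only one possible time unit; fiscal periods would need a mapping from the GL.

```python
from collections import defaultdict
from datetime import date
from decimal import Decimal

# Hypothetical invoice rows.
invoices = [
    {"invoice_number": "INV-001", "invoice_date": date(2024, 1, 15), "amount": Decimal("100.00")},
    {"invoice_number": "INV-002", "invoice_date": date(2024, 1, 31), "amount": Decimal("50.00")},
    {"invoice_number": "INV-003", "invoice_date": date(2024, 2, 1), "amount": Decimal("75.50")},
]


def total_by_month(rows, date_field="invoice_date", amount_field="amount"):
    """Sum the amounts per (year, month) of the chosen date attribute."""
    totals = defaultdict(Decimal)  # Decimal() starts each bucket at 0
    for row in rows:
        d = row[date_field]
        totals[(d.year, d.month)] += row[amount_field]
    return dict(totals)


monthly = total_by_month(invoices)
print(monthly)
```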

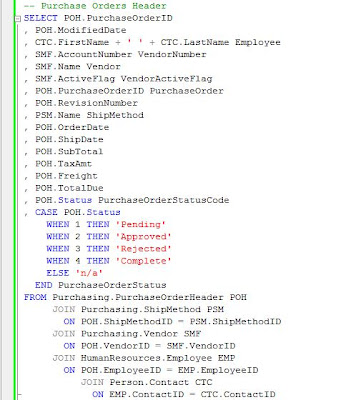

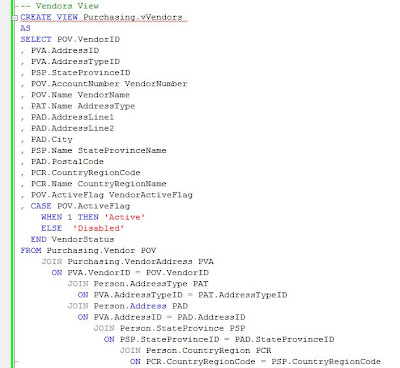

Statuses

The various record statuses and document statuses should likewise be mandatory attributes in reports. Record statuses show whether a record is active, was cancelled or was marked as deleted, while document statuses show a document’s processing status and are often associated with a workflow (e.g. approval or processing workflows). The record statuses can be synchronized, and even merged, with the document statuses.

Whether expressed as flags or as lists of values, statuses are essential in delimiting the data set that needs to be considered in further calculations, because unapproved documents and cancelled records often have little or no relevance for the business. Unapproved documents are typically left out of the various calculations until they are approved, while cancelled records are associated with mistakes or with a need that no longer exists. Not being able to identify the active records can cause considerable confusion because, for example, some reports show only the active records while others show all the data available in a system. Showing the statuses in reports can therefore be important in reconciling the differences between reports, especially when calculations are involved.
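The effect on calculations can be sketched in Python. The status values (`ACTIVE`, `CANCELLED`, `APPROVED`, `IN_APPROVAL`) and field names are assumptions for this example; each system has its own lists of values.

```python
# Hypothetical PO rows with a record status and a document status.
po_rows = [
    {"po_number": "PO-1", "record_status": "ACTIVE", "document_status": "APPROVED", "amount": 100},
    {"po_number": "PO-2", "record_status": "CANCELLED", "document_status": "APPROVED", "amount": 40},
    {"po_number": "PO-3", "record_status": "ACTIVE", "document_status": "IN_APPROVAL", "amount": 60},
]


def is_relevant(row):
    """Keep only active records belonging to approved documents."""
    return row["record_status"] == "ACTIVE" and row["document_status"] == "APPROVED"


total_all = sum(row["amount"] for row in po_rows)
total_relevant = sum(row["amount"] for row in po_rows if is_relevant(row))
excluded = [row["po_number"] for row in po_rows if not is_relevant(row)]

print(total_all, total_relevant, excluded)
```

Two reports built on the same data would disagree by the difference between the two totals if only one of them applied the filter, and only the status attributes make that difference explainable.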

It’s advisable to also be able to see the cancelled records, for example in order to analyze the amount of waste, expressed as overwork, or to identify the records that were cancelled by mistake.

In reports with multiple levels of detail it can be useful to show the statuses from all levels, as the statuses might not be in sync or might have different meanings. In theory, when the statuses are in sync, and especially when considering cancellations, it should be enough to consider the status from the lowest level of detail of each logical entity (e.g. PO Shipment Status when considering POs, Invoice Line Status when considering Invoices, both statuses when considering POs together with Invoices). In practice things are rarely that clean, as programming errors and other business scenarios need to be considered.
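When the statuses from several levels are available in the report, a simple consistency check becomes possible. The Python sketch below flags shipments that are still open although their PO header is cancelled; the rule itself, the status values and the field names are assumptions for illustration, and the opposite case (a cancelled shipment under an approved PO) is treated as legitimate.

```python
# Hypothetical rows at PO Shipment level, carrying the header status as well.
shipments = [
    {"po_number": "PO-1", "po_status": "CANCELLED", "shipment_number": 1, "shipment_status": "CANCELLED"},
    {"po_number": "PO-2", "po_status": "CANCELLED", "shipment_number": 1, "shipment_status": "OPEN"},
    {"po_number": "PO-3", "po_status": "APPROVED", "shipment_number": 1, "shipment_status": "CANCELLED"},
]


def cancellation_out_of_sync(row):
    """True when the header is cancelled but the shipment is not."""
    return row["po_status"] == "CANCELLED" and row["shipment_status"] != "CANCELLED"


out_of_sync = [
    (row["po_number"], row["shipment_number"])
    for row in shipments
    if cancellation_out_of_sync(row)
]
print(out_of_sync)
```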

Action Owners

Include the Requestors, Document Preparers, Buyers, Managers or any other type of action owner, so that a user can trace direct or indirect issues back to them.

Note:

Such attributes can be used as a basis to calculate or reflect an action owner’s performance, which can infringe country or organization regulations, so you need to check whether there are any constraints in this respect and which set of attributes might be impacted. For example, there might be no problem in showing the Buyer, though it might be a problem to show who created or modified a record. If performance nevertheless needs to be calculated at action owner level, substitute any attribute that can be used to identify a person with a random value. However, if the mapping between the action owner and the substitute value is known (in case unique identifiers are used) or easy to obtain (by checking records in the system), the data might still be misused.
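The substitution could look like the following Python sketch, which replaces each action owner with a random token that stays the same across the owner’s records, so that per-owner figures can still be calculated. The function, the `OWNER-` prefix and the field names are hypothetical. As noted above, whoever holds the returned mapping can reverse the substitution, so it has to be protected or discarded.

```python
import secrets


def pseudonymize(rows, field):
    """Replace the values of `field` with random tokens, one token per owner."""
    mapping = {}   # real owner -> random token; protect or discard after use
    used = set()   # tokens already handed out
    result = []
    for row in rows:
        owner = row[field]
        if owner not in mapping:
            token = "OWNER-" + secrets.token_hex(4)
            while token in used:  # regenerate in the unlikely case of a collision
                token = "OWNER-" + secrets.token_hex(4)
            used.add(token)
            mapping[owner] = token
        # Build a new row so the original data is left untouched.
        result.append({**row, field: mapping[owner]})
    return result, mapping


# Hypothetical records with the user who created them.
records = [
    {"po_number": "PO-1", "created_by": "jsmith"},
    {"po_number": "PO-2", "created_by": "mdoe"},
    {"po_number": "PO-3", "created_by": "jsmith"},
]

pseudo_records, owner_mapping = pseudonymize(records, "created_by")
print(pseudo_records)
```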