Introduction

Although I started blogging 3-4 years ago, only this year (still 2010) did I start to allocate more time to it. I now have two blogs on which I try to post periodically, SQL Troubles and The Web of Knowledge, plus a Facebook support group of the same name for the latter blog. As a parenthesis, the two blogs approach related topics from different perspectives: the first focuses on data-related topics, while the second approaches data from a knowledge and web perspective. Because several posts qualify for both blogs, I was thinking of merging them, though given the different perspectives and the types of domains that deal with them, I’ll keep them apart, at least for the moment. Closing the parenthesis, I would love to allocate more time to blogging, though I have to balance it with my professional and personal life, and even if the three have many points in common, some delimitation is necessary. Because it’s the end of the year, I thought it’s maybe the best time to draw the line and analyze the achievements of the past year and the expectations for the next year(s), for each of the two blogs. So here are my thoughts:

Past and Present

It has been more than 10 years since I started working with various database systems, my work ranging from data modeling to database development, reporting, ERP systems, etc. I can’t consider myself an expert, though I’ve accumulated experience in a whole range of areas, which I think entitles me to say that I have something to write about, even if the respective themes are not rocket science. In addition, there is the human endeavor of learning something new each day, and in IT that’s quite an imperative: the evolution of the various technologies requires those who work in this domain to spend extra hours learning new things, or consolidating or reusing knowledge in new ways. I considered at that time, and I still do, that blogging helps the learning process. It allows me to externalize old or new knowledge, clear my thoughts, keep some kind of record of what I know, or at least a repository of information I can reuse when needed, and eventually receive some feedback. These are a few of the reasons for which this blog was born, and I hope the information presented here is also useful to other people.

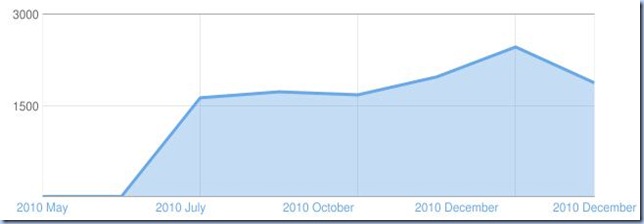

During the past year I managed to post more than 100 entries on my blog, the themes revolving around strings, hierarchical queries, CLR functionality, Data Quality, SSIS, ERPs, reports, troubleshooting, best practices, joins, etc. Not all the posts lived up to my expectations, though that’s a start, and I hope that I will find a personal style and that the quality of the posts will increase. I can’t say I received a lot of feedback; however, based on the visitor statistics provided by Clustrmaps and Google, the number of visitors this year was somewhere around 8500, close to my expectations. Talking about the number of visitors, it’s nice to have some visualization too, so for this year’s statistics I’ll use the Clustrmaps visualization, which provides a more detailed geographical overview than Google’s Stats, while for trending I show below Google’s Stats (which contains data from May until today):

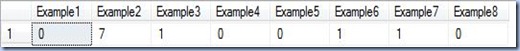

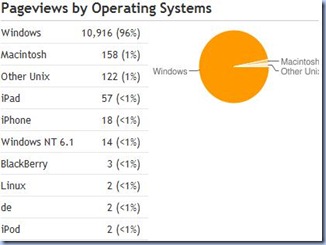

What I find great about Google’s Stats is that it also provides an overview of the most accessed posts and the traffic sources. There are also some statistics on the audience per browser and OS, though they are less important for my blogging needs, at least for the moment.

What I find interesting is that the most visited posts and the most searched keywords targeted SSIS and Oracle vs. SQL Server-related topics. So, if I want more traffic in the future, then maybe I should diversify my topics in this direction.

Future

I realize that I started many topics, so next year I will have to continue posting on them, while also targeting new topics like Relational Theory, Business Intelligence, Data Mining, Data Management, Statistics, SQL Server internals, data technologies, etc. Many of the posts will be an extension of my research on the above topics, and I was thinking of posting my learning notes as well, in the hope that I will receive more feedback. I realized that I need to be more active and provide more feedback on other blogs, using the respective comments as gateways to my blog, and to try to build a network around it. I was also thinking of starting a Facebook “support group” in which to post the links I discover, quotes or impressions in a more condensed form, but again this will take more of my time, so I’m not sure if it makes sense to do that. Maybe I should post them directly on the blog, though I wanted my posts to be a little more substantial than that. Anyway, I also know that I won’t manage to post more than an average of one post per week, though by my current expectations that is OK.

Right now all the posts follow a push model; in other words, I push the content regardless of whether there is a demand for it. That’s actually natural, because the blog has a personal character. In the future I expect to move in the direction of a pull model, in other words to write on topics requested by readers; for this, however, I need more feedback from you, the reader. So please let me know what topics you’d like to read about!

I close here, hoping that the coming year (2011) will be much better than the current one. I wish all of you a Happy New Year!